This is the last article in this series. This article is about another pre-trained CNN known as the ResNet along with an output visualization parameter known as the confusion matrix.

ResNet

This is also known as a residual network. It has three variations 51,101,151. They used a simple technique to achieve this high number of layers.

Credit – Xiaozhu0429/ Wikimedia Commons / CC-BY-SA-4.0

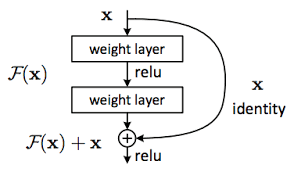

The problem in using many layers is that the input information gets changed in accordance with each layer and subsequently, the information will become completely morphed. So to prevent this, the input information is sent in again like a recurrent for every two steps so that the layers don’t forget the original information. Using this simple technique they achieved about 100+ layers.

ResNet these are the three fundamentals used throughout the network.

(conv1): Conv2d (3, 64, kernel_size= (7, 7), stride= (2, 2), padding= (3, 3))

(relu): ReLU

(maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1)

These are the layers found within a single bottleneck of the ResNet.

(0): Bottleneck

1 (conv1): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1))

2 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

3 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(relu): ReLU(inplace=True)

Down sampling

Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(1): Bottleneck

4 (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))

5 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

6 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(relu): ReLU(inplace=True)

)

(2): Bottleneck

7 (conv1): Conv2d(256, 64, kernel_size=(1, 1), stride=(1, 1))

8 (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

9 (conv3): Conv2d(64, 256, kernel_size=(1, 1), stride=(1, 1))

(relu): ReLU

There are many bottlenecks like these throughout the network. Hence by this, the ResNet is able to perform well and produce good accuracy. As a matter of fact, the ResNet is the model which won the ImageNet task competition.

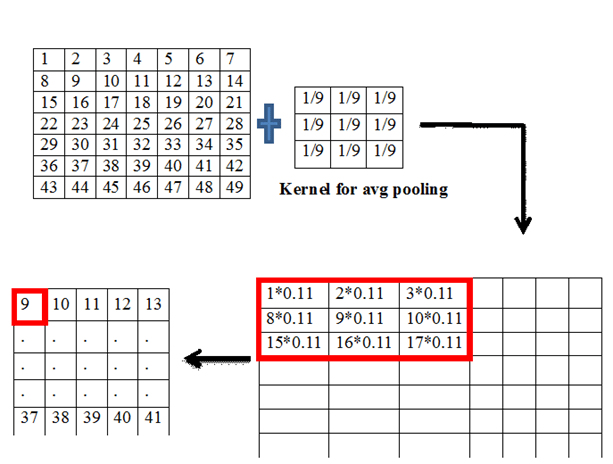

There are 4 layers in this architecture. Each layer has a bottleneck which comprises convolution followed by relu activation function. There are 46 convolutions, 2 pooling, 2 FC layers.

| Type | No of layers |

| 7*7 convolution | 1 |

| 1*1, k=64 + 3*3, k=64+1*1, k=256 convolution | 9 |

| 1*1, k=128+ 3*3, k=128+1*1, k=512 convolution | 10 |

| 1*1, k=256+ 3*3, k=256 + 1*1, k=1024 convolution | 16 |

| 1 * 1, k=512+3 * 3, k=512+1 * 1, k=2048 convolution | 9 |

| Pooling and FC | 4 |

| Total | 50 |

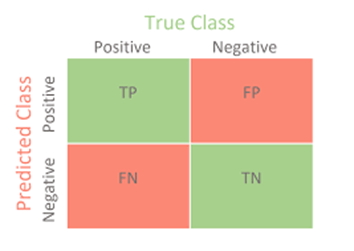

There is a particular aspect apart from the accuracy which is used to evaluate the model, especially in research papers. That method is known as the confusion matrix. It is seen in a lot of places and in the medical field it can be seen in test results. The terms used in the confusion matrix have become popularized in the anti-PCR test for COVID.

The four terms used in a confusion matrix are True Positive, True Negative, and False positive, and false negative. This is known as the confusion matrix.

True positive- both the truth and prediction are positive

True negative- both the truth and prediction are negative

False-positive- the truth is negative but the prediction is positive

False-negative- the truth is positive but the prediction is false

Out of these the false positive is dangerous and has to be ensured that this value is minimal.

We have now come to the end of the series. Hope that you have got some knowledge in this field of science. Deep learning is a very interesting field since we can do a variety of projects using the artificial brain which we have with ourselves. Also, the technology present nowadays makes these implementations so easy. So I recommend all to study and do projects using these concepts. Till then,

You must be logged in to post a comment.