When I mention the word Photoshop, one of two thoughts may come to mind, depending on how much you already know about it. Beginners would call it a platform for photo editing, or maybe for making posters. Someone with ample knowledge of the field would have a lot more to say about it: they would tell you it has many strengths that will prove to be a boon for your creativity. I am not considering those who are not interested in this software, because if that were you, you would not have opened this article.

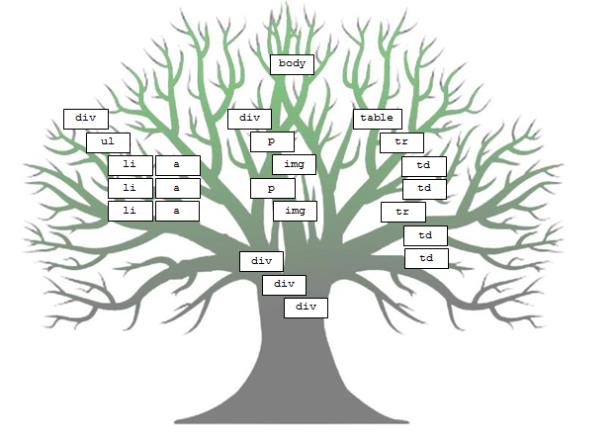

So let's start with a basic introduction. Photoshop is a raster graphics editor developed and published by Adobe for Windows and macOS. For a beginner, an obvious question is: what does this unfamiliar word "raster" mean? It means that Photoshop works with pixels. There are two types of graphic files:

- Raster Graphics: These files are made of pixels. You have to design a raster file for the size at which it will be displayed. You can't design a poster at any size and simply enlarge it as needed, because the image will become pixelated and ultimately spoil your poster and its purpose.

- Vector Graphics: These files are composed of paths defined by mathematics, so they can be scaled larger or smaller without losing quality. This means you can design at any size and then simply scale up or down as needed.
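To make the difference concrete, here is a small Python sketch. It is only an illustration of the idea, not how Photoshop or any vector editor works internally, and the function names `upscale_nearest` and `scale_path` are made up for this example. A raster image is modeled as a grid of pixel values and a vector shape as a list of points.

```python
def upscale_nearest(pixels, factor):
    """Enlarge a raster grid by repeating every pixel `factor` times
    horizontally and vertically (nearest-neighbor scaling)."""
    enlarged = []
    for row in pixels:
        # Repeat each pixel across the row...
        wide_row = [value for value in row for _ in range(factor)]
        # ...then repeat the widened row downwards.
        enlarged.extend(list(wide_row) for _ in range(factor))
    return enlarged


def scale_path(points, factor):
    """Scale a vector path by multiplying every coordinate by `factor`."""
    return [(x * factor, y * factor) for x, y in points]


# A tiny 2x2 raster image: 0 = white pixel, 1 = black pixel.
raster = [
    [0, 1],
    [1, 0],
]
big_raster = upscale_nearest(raster, 2)
for row in big_raster:
    print(row)

# A triangle described as a vector path (three corner points).
triangle = [(0, 0), (4, 0), (2, 3)]
big_triangle = scale_path(triangle, 10)
print(big_triangle)
```

In the enlarged raster, each original pixel simply becomes a bigger square block; no new detail appears, which is what you see as pixelation. The scaled triangle is still described by exactly three points, so its edges stay sharp at any size.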

Please don't judge Photoshop on this basis alone; it has its own strengths. Adobe Photoshop is a vital resource for creative practitioners such as programmers, web developers, visual artists, and photographers. It is commonly used for editing images, retouching, designing image templates, creating mock-ups of websites, and adding effects. You can edit digital or scanned images for online or print use. Website templates can be created inside Photoshop, and their designs can be finalized before the developers move on to the coding stage. It is also possible to create and export stand-alone graphics for use in other programs.

Now let's come to the point: how can you learn Photoshop? Adobe Photoshop can be learned in several ways. Popular methods include taking Photoshop classes in person, taking live online classes, following online Photoshop tutorials, and reading Photoshop books. Classes are designed to help students benefit from both group learning and one-on-one instruction. Classroom learning also has the advantage of guided instruction, which helps students overcome challenges or obstacles. Such training programs are especially useful when you are being introduced to new apps or tools. The American Graphics Institute in Cambridge, as well as New York City and Philadelphia, provides Photoshop courses.

YouTube is also a very good source and offers a lot of content, all of it for free. What I would recommend most is to practice, practice, and practice, because practice makes permanent. Spend more time on practice than on theory, because you will learn more by doing things yourself than by just reading or hearing about them. You have to get your hands dirty; I would say this is the only way to master Photoshop, or at least to learn it.

Hope you find this helpful. Happy learning!
