When browsing the internet, do you ever feel like building web pages of your own? If so, web development might be the path for you. It is one of the basic skills that almost every technology enthusiast should learn, and one of the most fascinating and accessible. So, what is web development?

Web development refers to building, creating, and maintaining websites. It includes aspects such as web design, web publishing, web programming, and database management.

While the terms “web developer” and “web designer” are often used synonymously, they do not mean the same thing. Technically, a web designer only designs website interfaces using HTML and CSS. A web developer may be involved in designing a website, but may also write web scripts in languages such as PHP and ASP. Additionally, a web developer may help maintain and update a database used by a dynamic website.

Web development includes many types of web content creation. Some examples include hand coding web pages in a text editor, building a website in a program like Dreamweaver, and updating a blog via a blogging website. In recent years, content management systems like WordPress, Drupal, and Joomla have also become a popular means of web development. These tools make it easy for anyone to create and edit their website using a web-based interface.

Web development has many terms associated with it, such as front-end, back-end, and full-stack developer. What do they mean, and in what context are they used?

Front-end developer

A front-end developer is responsible for the look and design of a website. The design aims to ensure that, when users open the site, they see information in a format that is readable and relevant. This is complicated by the fact that people now use a vast range of devices with different screen sizes and resolutions, so the developer has to take these into account when building the site. They need to ensure that the site displays correctly in different browsers (cross-browser), on different operating systems (cross-platform), and on different devices (cross-device), which requires careful planning on the developer's side.

The front end is built using the languages discussed below:

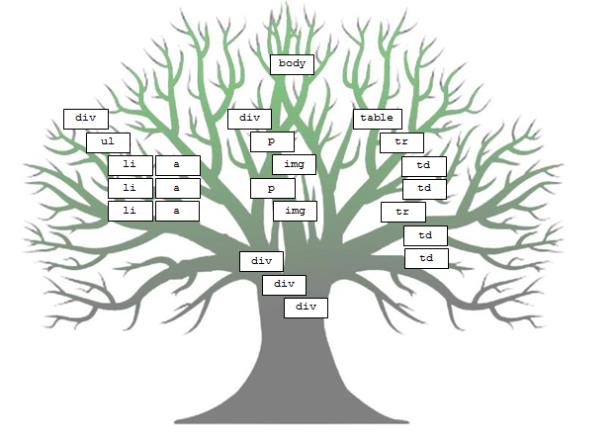

HTML: HTML stands for HyperText Markup Language. It is the markup language used to build the structure of a web page. The name combines two ideas: hypertext, which refers to the links that connect one web page to another, and markup, which uses tags to label the parts of a document (headings, paragraphs, images, and so on) and thereby define the page's structure.
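To make the two halves of the name concrete, here is a minimal sketch that generates a small HTML fragment from TypeScript: the `<h1>` and `<p>` tags are the markup, and the `<a href>` element is the hypertext link to another page. The helper names `escapeHtml` and `buildPage` and the URL `/about.html` are hypothetical, chosen only for illustration; in practice you would usually write HTML by hand or let a framework generate it.

```typescript
// Hypothetical helper: replace characters that have special meaning in HTML
// so that text is displayed as text rather than interpreted as markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical helper that builds a tiny page fragment.
// Markup: <h1> and <p> label the structure of the content.
// Hypertext: <a href="..."> links this page to another page.
function buildPage(title: string, linkText: string, href: string): string {
  return [
    `<h1>${escapeHtml(title)}</h1>`,
    `<p>Read more on the <a href="${escapeHtml(href)}">${escapeHtml(linkText)}</a>.</p>`,
  ].join("\n");
}

console.log(buildPage("My First Page", "about page", "/about.html"));
// <h1>My First Page</h1>
// <p>Read more on the <a href="/about.html">about page</a>.</p>
```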

CSS: Cascading Style Sheets, commonly referred to as CSS, is a simple language designed to make web pages presentable. CSS lets you apply styles such as colors, fonts, spacing, and layout to your pages. More importantly, it lets you keep those styles separate from the HTML that holds the content.
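A CSS rule is a selector followed by a block of `property: value` declarations, and it lives apart from the HTML it styles. The sketch below, built around a hypothetical `toCssRule` helper, turns a plain object of properties into a CSS rule to show that shape; it is an illustration only, not a standard API.

```typescript
// Property/value pairs for one rule, written in camelCase as JavaScript code often does.
type StyleDeclarations = Record<string, string>;

// Hypothetical helper: convert camelCase names to the kebab-case names CSS
// expects, e.g. fontSize -> font-size.
function toKebabCase(propName: string): string {
  return propName.replace(/[A-Z]/g, (c) => "-" + c.toLowerCase());
}

// Build "selector { property: value; ... }" from the declarations.
function toCssRule(selector: string, decls: StyleDeclarations): string {
  const body = Object.keys(decls)
    .map((prop) => `  ${toKebabCase(prop)}: ${decls[prop]};`)
    .join("\n");
  return `${selector} {\n${body}\n}`;
}

// Changing these rules restyles every <h1> without touching the HTML.
console.log(toCssRule("h1", { color: "navy", fontSize: "2rem" }));
// h1 {
//   color: navy;
//   font-size: 2rem;
// }
```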

JavaScript: JavaScript is a well-known scripting language used to make web pages interactive for the user. It extends what a website can do, powering everything from form validation to games and full web applications.
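Much of this interactivity comes from event listeners: your code registers a function, and the browser calls it when the user clicks, types, or scrolls. In a real page you would call `addEventListener("click", ...)` on a DOM element; because that needs a browser, the sketch below models the same idea with a small, hypothetical `SimpleButton` class so that it runs anywhere.

```typescript
// Hypothetical stand-in for a DOM button: it stores listeners and calls
// them when a click happens, mirroring the browser's event model.
type ClickListener = () => void;

class SimpleButton {
  private listeners: ClickListener[] = [];

  // Comparable in spirit to element.addEventListener("click", listener).
  onClick(listener: ClickListener): void {
    this.listeners.push(listener);
  }

  // In a browser the user's click triggers this; here we call it directly.
  click(): void {
    for (const listener of this.listeners) {
      listener();
    }
  }
}

// Page state that the listener updates, as a "like" counter widget might.
let clickCount = 0;
const likeButton = new SimpleButton();
likeButton.onClick(() => {
  clickCount += 1;
  console.log(`Liked ${clickCount} time(s)`);
});

likeButton.click(); // prints "Liked 1 time(s)"
likeButton.click(); // prints "Liked 2 time(s)"
```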

Front-end frameworks and libraries

AngularJS: AngularJS is an open-source, front-end JavaScript framework used mainly to build single-page applications (SPAs). It turns static HTML into dynamic HTML by extending HTML with custom attributes called directives and by binding data to the HTML. As an open-source project, it can be freely used and modified by anyone. Note that AngularJS has been superseded by its successor, Angular, and official support for AngularJS ended as of January 2022.
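Data binding means the view is derived from a data model, so changing the data changes what the user sees. AngularJS expresses this with `{{ }}` expressions and directives such as `ng-model`. The sketch below is a deliberately simplified illustration of one-way binding (model to view) using `{{ }}` placeholders; the `interpolate` function and `Model` type are hypothetical, and this is not how AngularJS itself is implemented.

```typescript
// Hypothetical, simplified one-way binding: the view is recomputed from
// the model, so updating the model updates the rendered output.
type Model = Record<string, string | number>;

// Replace each {{ key }} placeholder with the matching model value.
function interpolate(template: string, data: Model): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match: string, key: string) =>
    key in data ? String(data[key]) : match // leave unknown keys untouched
  );
}

const greetingTemplate = "<p>Hello, {{ userName }}! You have {{ unread }} new messages.</p>";
const model: Model = { userName: "Ada", unread: 3 };

console.log(interpolate(greetingTemplate, model));
// <p>Hello, Ada! You have 3 new messages.</p>

model.unread = 4; // change the data...
console.log(interpolate(greetingTemplate, model)); // ...and re-render the view
// <p>Hello, Ada! You have 4 new messages.</p>
```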

React.js: React is a declarative, efficient, and flexible JavaScript library for building user interfaces. It is an open-source, component-based front-end library that is responsible only for the view layer of an application. It is maintained by Meta (formerly Facebook) together with a community of contributors.
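React's central idea is that a UI is built from components: functions that take inputs (props) and declaratively describe what should appear. Real React components return JSX elements that `react-dom` renders; the sketch below mimics only the component idea with plain functions that return HTML strings. The `Greeting` and `UserList` components and the string-based rendering are assumptions for illustration, not React's API.

```typescript
// Hypothetical, React-inspired sketch: components are plain functions from
// props to markup. Real React returns JSX elements and escapes text
// automatically; this sketch does neither, for brevity.
interface GreetingProps {
  userName: string;
}

function Greeting(props: GreetingProps): string {
  return `<h2>Hello, ${props.userName}</h2>`;
}

interface UserListProps {
  users: string[];
}

// Components compose: UserList renders one Greeting per user.
function UserList(props: UserListProps): string {
  const items = props.users.map((u) => `<li>${Greeting({ userName: u })}</li>`);
  return `<ul>${items.join("")}</ul>`;
}

console.log(UserList({ users: ["Ada", "Linus"] }));
// <ul><li><h2>Hello, Ada</h2></li><li><h2>Hello, Linus</h2></li></ul>
```

Because each component depends only on its props, the same inputs always describe the same output, which is what "declarative" means here.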

Bootstrap: Bootstrap is a free, open-source toolkit for creating responsive websites and web applications. It is one of the most popular HTML, CSS, and JavaScript frameworks for developing responsive, mobile-first websites.

jQuery: jQuery is an open-source JavaScript library that simplifies the interaction between an HTML/CSS document, or more precisely the Document Object Model (DOM), and JavaScript. In practical terms, jQuery simplifies HTML document traversal and manipulation, browser event handling, DOM animations, Ajax interactions, and cross-browser JavaScript development.

Sass: Sass is a mature, stable, and powerful CSS extension language. It adds features that plain CSS lacks, such as variables, nesting, mixins, and inheritance, which make large stylesheets easier to write and maintain.
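Nesting is one of the features Sass adds: you write child selectors inside their parent, and the Sass compiler flattens them into ordinary CSS. The sketch below imitates that compile step for nesting only (no variables, mixins, or `&` parent selector); the `NestedRule` shape and `flatten` function are hypothetical and not part of Sass.

```typescript
// Hypothetical model of a nested Sass-style rule.
interface NestedRule {
  selector: string;
  declarations: string[];   // e.g. ["color: white"]
  children?: NestedRule[];  // rules nested inside this one
}

// Flatten nested rules into plain CSS, joining parent and child selectors
// with a space (the descendant combinator), as Sass does by default.
function flatten(rule: NestedRule, parentSelector: string = ""): string[] {
  const selector = parentSelector ? `${parentSelector} ${rule.selector}` : rule.selector;
  const out: string[] = [];
  if (rule.declarations.length > 0) {
    out.push(`${selector} { ${rule.declarations.join("; ")}; }`);
  }
  for (const child of rule.children || []) {
    out.push(...flatten(child, selector));
  }
  return out;
}

// Models this Sass source:
// nav {
//   background: black;
//   a { color: white; }
// }
const navRule: NestedRule = {
  selector: "nav",
  declarations: ["background: black"],
  children: [{ selector: "a", declarations: ["color: white"] }],
};

console.log(flatten(navRule).join("\n"));
// nav { background: black; }
// nav a { color: white; }
```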

Other front-end libraries and frameworks include Semantic UI, Materialize, Backbone.js, and Ember.js. Express.js, which is sometimes listed alongside them, is actually a back-end framework.

Back-end developer

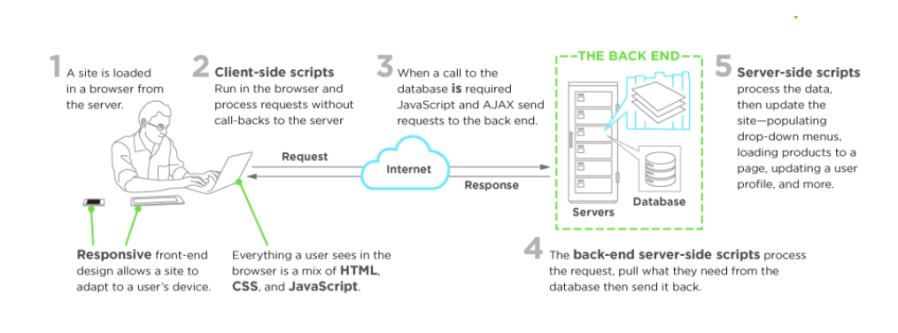

The back end is the server side of a website. It stores and organizes data, and it makes sure that everything on the client side of the website works correctly. It is the part of the website that users cannot see or interact with directly: the parts and features built by back-end developers are accessed indirectly, through a front-end application. Activities such as writing APIs, creating libraries, and working with system components that have no user interface, or even systems for scientific programming, are also part of the back end.
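As a rough illustration of the "writing APIs" part of back-end work, here is a hypothetical request handler that a front end would reach over HTTP. It keeps data in server-side storage that users never see directly, validates input, and returns JSON-style responses with HTTP status codes. The `ApiRequest`/`ApiResponse` types, the `/api/tasks` route, and the in-memory array standing in for a database are all assumptions; a real server would receive requests through a runtime or framework such as Node.js.

```typescript
// Hypothetical request/response shapes; a real server framework provides its own.
interface ApiRequest {
  method: string;
  path: string;
  body?: { title?: string };
}

interface ApiResponse {
  status: number; // HTTP status code
  body: unknown;  // data sent back to the front end as JSON
}

interface Task {
  id: number;
  title: string;
}

// Server-side storage, visible to users only through the API
// (a real application would use a database instead).
const tasks: Task[] = [];
let nextId = 1;

function handleRequest(req: ApiRequest): ApiResponse {
  if (req.path !== "/api/tasks") {
    return { status: 404, body: { error: "Not found" } };
  }
  if (req.method === "GET") {
    return { status: 200, body: tasks };
  }
  if (req.method === "POST") {
    const title = req.body && req.body.title ? req.body.title.trim() : "";
    if (title === "") {
      // Validation on the server cannot be bypassed by the user.
      return { status: 400, body: { error: "title is required" } };
    }
    const task: Task = { id: nextId++, title };
    tasks.push(task);
    return { status: 201, body: task };
  }
  return { status: 405, body: { error: "Method not allowed" } };
}

console.log(handleRequest({ method: "POST", path: "/api/tasks", body: { title: "Write docs" } }).status); // 201
console.log(handleRequest({ method: "POST", path: "/api/tasks", body: {} }).status); // 400
console.log(JSON.stringify(handleRequest({ method: "GET", path: "/api/tasks" }).body)); // [{"id":1,"title":"Write docs"}]
```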

Back-end Languages

The back end is built using languages such as those discussed below:

PHP: PHP is a server-side scripting language designed specifically for web development. It is called a server-side scripting language because PHP code runs on the server; the browser receives only the output (usually HTML), not the PHP source.

C++: C++ is a general-purpose programming language that is widely used in competitive programming. It can also be used for back-end development.

Java: Java is one of the most common and widely used programming languages and platforms. It is highly scalable, and a large ecosystem of ready-made Java components is available.

Python: Python is a programming language that helps you to work quickly and implement systems more efficiently.

JavaScript: JavaScript can be used for both front-end and back-end programming.
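One practical benefit of using the same language on both ends is code sharing: a single function can run in the browser for instant feedback and on the server for enforcement. In the sketch below, all three functions live in one file for brevity; in a real project `validateUsername` would sit in a shared module imported by both the front-end bundle and the server. The function names and the username rule (3 to 20 letters, digits, or underscores) are hypothetical.

```typescript
// Shared rule (hypothetical): would be imported by both browser and server code.
// Returns an error message, or null when the value is valid.
function validateUsername(username: string): string | null {
  if (username.length < 3 || username.length > 20) {
    return "Username must be 3-20 characters long.";
  }
  if (!/^[A-Za-z0-9_]+$/.test(username)) {
    return "Use only letters, digits, and underscores.";
  }
  return null;
}

// Front end: give instant feedback while the user types.
function showFormHint(input: string): string {
  const problem = validateUsername(input);
  return problem === null ? "Looks good!" : problem;
}

// Back end: enforce the same rule, since client-side checks can be bypassed.
function acceptSignup(username: string): { status: number; error?: string } {
  const problem = validateUsername(username);
  return problem === null ? { status: 201 } : { status: 400, error: problem };
}

console.log(showFormHint("ab"));                         // Username must be 3-20 characters long.
console.log(JSON.stringify(acceptSignup("web_dev_42"))); // {"status":201}
```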

Node.js: Node.js is an open-source, cross-platform runtime environment for running JavaScript code outside the browser. Keep in mind that Node.js is neither a framework nor a programming language, although many people mistake it for one or the other. Developers use Node.js to build back-end services such as APIs for web and mobile apps, and it is used by major companies such as PayPal, Uber, Netflix, and Walmart.

Back-end Frameworks

Popular back-end frameworks include Express, Django, Rails, Laravel, and Spring.
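Many back-end frameworks organize request handling as a pipeline. In Express, for example, each middleware function receives the request, the response, and a `next` callback that passes control to the next function in the chain. The sketch below reimplements that idea in a few lines so it runs without installing anything; the `Ctx` type, `runPipeline` function, and the three middleware are hypothetical and are not Express's API.

```typescript
// Hypothetical context passed along the chain (Express uses separate req/res objects).
interface Ctx {
  path: string;
  user?: string;
  log: string[];
  responseBody?: string;
}

type Middleware = (ctx: Ctx, next: () => void) => void;

// Run middleware in order; each one decides whether to call next().
function runPipeline(middlewares: Middleware[], ctx: Ctx): Ctx {
  function dispatch(i: number): void {
    if (i < middlewares.length) {
      middlewares[i](ctx, () => dispatch(i + 1));
    }
  }
  dispatch(0);
  return ctx;
}

const logger: Middleware = (ctx, next) => {
  ctx.log.push(`request for ${ctx.path}`);
  next();
};

const auth: Middleware = (ctx, next) => {
  if (ctx.user) {
    next(); // authenticated: continue to the route handler
  } else {
    ctx.responseBody = "401 Unauthorized"; // stop the chain here
  }
};

const dashboard: Middleware = (ctx) => {
  ctx.responseBody = `Welcome, ${ctx.user}`;
};

const chain = [logger, auth, dashboard];
console.log(runPipeline(chain, { path: "/dashboard", user: "ada", log: [] }).responseBody); // Welcome, ada
console.log(runPipeline(chain, { path: "/dashboard", log: [] }).responseBody);              // 401 Unauthorized
```

Splitting concerns such as logging and authentication into separate middleware lets the same steps be reused across many routes.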

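Other back-end programming and scripting languages include C#, Ruby, and Go. REST, which often appears in such lists, is not a language but an architectural style for designing web APIs.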

Difference between front end and back end

Front-end and back-end development are quite different from each other, but they are two sides of the same coin: the front end is what users see and interact with, and the back end is what makes it work.

The front end is the part of the website that users can see and interact with: the graphical user interface (GUI), including the design, navigation menus, text, pictures, and videos. The back end, on the other hand, is everything that happens in the background, which users cannot see or interact with directly.

Languages commonly used for the front end are HTML, CSS, and JavaScript, while the back end commonly uses Java, Ruby, Python, and .NET languages such as C#.

Full-stack developer

A full-stack web developer is someone who can develop both client-side and server-side software. Besides mastering HTML and CSS, a full-stack developer also knows how to:

- Program a browser (using JavaScript, jQuery, Angular, or Vue)

- Program a server (using PHP, ASP, Python, or Node.js)

- Program a database (using SQL, SQLite, or MongoDB)

Becoming a full-stack developer is valuable because you understand almost every aspect of web development, so you can switch between front-end and back-end work as a project requires.

Resources to learn

- W3Schools (Free)

- Coursera (Paid)

- Udemy (Paid)

- freeCodeCamp (Free)

- Treehouse (Paid)

- Codecademy (Free)

- Traversy Media (Free)

- HTML Dog (Free)

All the best on this amazing learning journey, and I hope you found this piece informative.
